dura.hnms.gr a.k.a www.cosmo-model.org

This presentation describes dura.hnms.gr, the virtual machine that currently serves as www.cosmo-model.org.

After a short history of its predecessors, it presents the hardware, the services it provides, the workload due to these services and its users, and ends with an assessment of its current situation and a suggestion for a possible(?) near-future use.

The Cosmo web site has existed since 2000. For most of its life, it was hosted on HNMS-owned machines. These were office desktops, way past their prime, that had been replaced by newer PCs; hosting the Cosmo web site was their last mission before the junkyard.

This changed from 2016 on: the last two systems were bought with consortium money, and both were modern and more than adequate for the modest needs of the Cosmo web and mail services.

Here is the history of the systems that have served as www.cosmo-model.org:

| host name | www since | system notes |
|---|---|---|
| lunar | 2000 | an old SPARC workstation running Sun Solaris, the last of its kind at HNMS |
| | 2001 | a 486 office PC (my first system installation at HNMS and the first PC to run Linux here) |
| | 2005 | a Pentium 4 PC (from a batch bought for forecasting during the 2003 pre-Olympics) |
| | 2007 | the last "lunar": a double-threaded 2.6 GHz Xeon CPU with 1 GiB memory (a peripheral workstation of the supercomputer bought in 2004) |
| remora | 2016 | a 4 GHz quad-core, double-threaded Core i7 with 32 GiB memory, the first to be purchased with consortium money (1500 €) |
| dura | 2023 | the current system: a virtual machine running on a slim (1U) rack unit bought with consortium money (5000 €) in December 2021. The node resources dedicated exclusively to dura are 16 2.6 GHz Xeon cores, 128 GiB of memory and 800 GiB of disk space. The node is larger than dura: 12 more Xeon cores, 128 GiB of memory and 300 GiB of disk space are reserved for future expansion and/or a second system (e.g. one dedicated to file exchange). |

These are the VIRTUAL hardware characteristics of the system, followed by some concerns:

```
CPU: Model name: Intel(R) Xeon(R) CPU E5-2697 v3 @ 2.60GHz
Socket(s): 1
Core(s) per socket: 16
Thread(s) per core: 1
CPU(s): 16
CPU MHz: 2599.998
On-line CPU(s) list: 0-15
NUMA node(s): 2
Vendor ID: GenuineIntel
Hypervisor vendor: VMware
L1d cache: 32 KiB
L1i cache: 32 KiB
L2 cache: 256 KiB
L3 cache: 35 MiB
NUMA node0 CPU(s): 0-7
NUMA node1 CPU(s): 8-15

Memory: RAM: total: 125.9 GiB used: 3 GiB (2.4%)
Array-1: capacity: N/A slots: 128 note: check EC: N/A
Device-1: NVD #0 size: No Module Installed
...
Array-2: capacity: 1.02 TiB note: est. slots: 64 EC: None
Device-1: Slot0 size: 16 GiB speed:
Device-2: Slot1 size: 16 GiB speed:
...
Device-8: Slot7 size: 16 GiB speed:

Drives: Local Storage: total: 800 GiB used: 58.06 GiB (7.3%)
ID-1: /dev/sda model: Virtual disk size: 200 GiB
ID-2: /dev/sdb model: Virtual disk size: 600 GiB

Graphics: Device-1: VMware SVGA II Adapter driver: vmwgfx v: 2.18.0.0
OpenGL: renderer: llvmpipe (LLVM 11.0.1 256 bits) v: 4.5 Mesa 20.3.5
```
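The CPU topology lines in the listing above can be cross-checked mechanically. The sketch below (the helper names `parse_lscpu`, `cpu_range_size` and `check_topology` are hypothetical, and it assumes `lscpu`-style `key: value` text as input) confirms that sockets × cores per socket × threads per core equals the reported CPU count and the CPUs listed per NUMA node. Inside a VM this only describes the virtual topology the hypervisor presents; it cannot reveal whether hyperthreading is enabled on the physical host.

```python
# Excerpt of the lscpu fields from the listing above (copied verbatim).
LSCPU_TEXT = """\
Socket(s): 1
Core(s) per socket: 16
Thread(s) per core: 1
CPU(s): 16
NUMA node(s): 2
NUMA node0 CPU(s): 0-7
NUMA node1 CPU(s): 8-15
"""


def parse_lscpu(text: str) -> dict[str, str]:
    """Split each 'key: value' line at its first colon into a dict entry."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def cpu_range_size(spec: str) -> int:
    """Count the CPUs in a list such as '0-7', '5' or '0-3,8-11'."""
    total = 0
    for part in spec.split(","):
        lo, _, hi = part.partition("-")
        total += int(hi or lo) - int(lo) + 1
    return total


def check_topology(fields: dict[str, str]) -> bool:
    """True if sockets * cores * threads matches CPU(s) and the NUMA lists."""
    logical = int(fields["CPU(s)"])
    product = (int(fields["Socket(s)"])
               * int(fields["Core(s) per socket"])
               * int(fields["Thread(s) per core"]))
    nodes = int(fields["NUMA node(s)"])
    numa_total = sum(cpu_range_size(fields[f"NUMA node{i} CPU(s)"])
                     for i in range(nodes))
    return product == logical == numa_total


fields = parse_lscpu(LSCPU_TEXT)
print(check_topology(fields))  # True for the values above
```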

Concerns: virtual system ⇒ real + imaginary part ⇒ complex algebra

- Virtual CPUs are just threads on the hypervisor's physical CPUs, but HNMS IT people assured me that the physical CPUs are not oversubscribed, so the 16 single-thread CPUs reported in the listing will map to 16 physical processing units (PUs).

  The E5-2697 v3 Xeons, reported as single-threaded above, are physically double-threaded (i.e. they have 2 processing units per core). What I don't know is whether the physical cores have hyperthreading enabled. If not, dura's 16 virtual cores really correspond to 16 physical cores. Otherwise, they could be 8 cores with 2 processing units each.

  If the latter is the case, keep in mind that two PUs in a single core are far from equivalent to two cores. Dura is an everyday system, so its workload is very different from the ideal situation where computation and I/O are kept separate, each on its own PU. In short, an 8×2.6 GHz system is NOT much more powerful than its 4×4 GHz predecessor. Remember, though, that there are 12 more Xeon cores in reserve.

- The 800 GiB of disk storage (plus the 300 GiB in reserve) is less than the ~1.5 TB of the previous host. The raw disk space is larger, but the extra space is lost to the RAID configuration.

- The virtual CPUs are distributed over two NUMA nodes, yet all memory is reported in a single array while the other array appears empty. I hope all this memory is not placed behind a single physical memory controller.
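The "8×2.6 GHz vs 4×4 GHz" comparison in the first concern is simple arithmetic on aggregate clock (physical cores × nominal frequency). The sketch below tabulates both hyperthreading scenarios for dura against remora; the `Scenario` class is a hypothetical illustration. Aggregate clock is only a crude ceiling on throughput: it ignores per-clock differences between the two CPU generations, turbo behaviour and the execution resources that two hyperthreads share, which is why two PUs in one core count here as one core.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    physical_cores: int
    ghz: float

    def aggregate_ghz(self) -> float:
        """Cores times nominal clock: a crude ceiling, not a benchmark."""
        return self.physical_cores * self.ghz


SCENARIOS = [
    # dura if the 16 vCPUs land on 16 distinct physical cores (no HT sharing)
    Scenario("dura, 16 full cores", 16, 2.6),
    # dura if the 16 vCPUs are the 2 hyperthreads of 8 physical cores
    Scenario("dura, 8 cores x 2 PUs", 8, 2.6),
    # the previous host: quad-core i7 at 4 GHz
    Scenario("remora, 4 cores", 4, 4.0),
]

baseline = SCENARIOS[-1].aggregate_ghz()
for s in SCENARIOS:
    ratio = s.aggregate_ghz() / baseline
    print(f"{s.name:22s} {s.aggregate_ghz():5.1f} GHz  x{ratio:.2f} vs remora")
```

The ratios come out at 2.60, 1.30 and 1.00: with hyperthread sharing, dura's ceiling is only about 1.3 times remora's, in line with the "NOT much more powerful" verdict.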

This is the software related to the web/mail services provided by dura:

| category | software |
|---|---|
| web services: | |
| mail services: | |
| specialized: | |
| COSMO-specific: | |
| dependencies: | MySQL database server as the storage backend of other installations (for Sympa, Request Tracker and FTE accounting; until recently also for the cosmo-model development history) |

Login users

With the new system, long-needed shell access (via ssh) became possible. So far, only three people have asked for it and two have received it: Felix Fundel, for overseeing the RFDBK installation, and Flora Gofa, for uploading verification input.

Neither of them has really been using it so far, as this login history shows:

```
gofa pts/1 10.1.33.20 Wed Jul 5 10:03 - 12:23 (02:20)
gofa pts/0 10.1.33.20 Wed Jul 5 08:45 - 10:58 (02:12)
gofa pts/1 10.1.33.11 Wed Jul 5 08:42 - 08:42 (00:00)
gofa pts/1 10.1.33.11 Wed Jul 5 08:40 - 08:42 (00:01)
gofa pts/1 10.1.33.11 Wed Jul 5 08:38 - 08:40 (00:01)
fdbk pts/0 141.38.40.96 Mon May 22 06:41 - 09:01 (02:19)
fdbk pts/0 141.38.40.96 Mon May 22 06:41 - 06:41 (00:00)
fdbk pts/0 141.38.40.96 Mon May 22 06:38 - 06:41 (00:02)
```
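The login history above has the format printed by `last`, with the session length in parentheses at the end of each line. A minimal sketch (the function name `minutes_per_user` is hypothetical) that totals those durations per user makes the "not really used" point quantitative. It sums the reported durations, so overlapping sessions of the same user are counted twice, and it accepts the `days+hh:mm` form that `last` uses for sessions longer than a day.

```python
from collections import defaultdict

# The login history above, as printed by `last` (copied verbatim).
LAST_OUTPUT = """\
gofa pts/1 10.1.33.20 Wed Jul 5 10:03 - 12:23 (02:20)
gofa pts/0 10.1.33.20 Wed Jul 5 08:45 - 10:58 (02:12)
gofa pts/1 10.1.33.11 Wed Jul 5 08:42 - 08:42 (00:00)
gofa pts/1 10.1.33.11 Wed Jul 5 08:40 - 08:42 (00:01)
gofa pts/1 10.1.33.11 Wed Jul 5 08:38 - 08:40 (00:01)
fdbk pts/0 141.38.40.96 Mon May 22 06:41 - 09:01 (02:19)
fdbk pts/0 141.38.40.96 Mon May 22 06:41 - 06:41 (00:00)
fdbk pts/0 141.38.40.96 Mon May 22 06:38 - 06:41 (00:02)
"""


def minutes_per_user(text: str) -> dict[str, int]:
    """Sum the '(hh:mm)' or '(d+hh:mm)' duration of each line, per user.

    Overlapping sessions of the same user are counted twice, so this
    overstates presence on the system rather than understating it.
    """
    totals: dict[str, int] = defaultdict(int)
    for line in text.splitlines():
        if not line.endswith(")"):
            continue  # skip 'still logged in', 'wtmp begins' and blank lines
        user = line.split()[0]
        duration = line[line.rindex("(") + 1:-1]
        days, _, hhmm = duration.rpartition("+")
        hours, minutes = hhmm.split(":")
        totals[user] += int(days or 0) * 1440 + int(hours) * 60 + int(minutes)
    return dict(totals)


for user, mins in minutes_per_user(LAST_OUTPUT).items():
    print(f"{user}: {mins // 60}h{mins % 60:02d}m")
```

For the lines above this prints `gofa: 4h34m` and `fdbk: 2h21m`, a few hours in total across all the listed sessions.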

System load

When not serving web requests or mail transfers, the system is practically in sleep mode. This typical "top" listing shows that even large installations like mysql and sympa use at most 0.7% CPU:

```
PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
311164 root 20 0 10312 3900 3124 R 1.0 0.0 0:00.91 top
60783 mysql 20 0 3677016 528560 39180 S 0.7 0.4 709:41.90 mysqld
69609 sympa 20 0 153472 107608 16532 S 0.7 0.1 86:53.72 sympa_msg.pl
1 root 20 0 166300 12276 7684 S 0.3 0.0 3:25.13 systemd
2 root 20 0 0 0 0 S 0.0 0.0 0:13.98 kthreadd
3 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 rcu_gp
```
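A note on reading these numbers: if top was running in its default Irix mode (an assumption, since the mode is not shown), each task's %CPU is relative to a single CPU, so 0.7% is a fraction of one of dura's 16 vCPUs, not of the whole machine. The sketch below (the names `cpu_by_command` and `N_VCPUS` are hypothetical) reads the %CPU column from the excerpt above, using the header to locate columns, and converts the per-core total into a share of all 16 vCPUs.

```python
# The top excerpt above (header plus process lines, copied verbatim).
TOP_OUTPUT = """\
PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
311164 root 20 0 10312 3900 3124 R 1.0 0.0 0:00.91 top
60783 mysql 20 0 3677016 528560 39180 S 0.7 0.4 709:41.90 mysqld
69609 sympa 20 0 153472 107608 16532 S 0.7 0.1 86:53.72 sympa_msg.pl
1 root 20 0 166300 12276 7684 S 0.3 0.0 3:25.13 systemd
2 root 20 0 0 0 0 S 0.0 0.0 0:13.98 kthreadd
3 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 rcu_gp
"""

N_VCPUS = 16  # dura's virtual CPU count, from the lscpu listing


def cpu_by_command(text: str) -> dict[str, float]:
    """Map COMMAND -> %CPU, locating both columns from the header line.

    Assumes command names contain no spaces, as in the excerpt above.
    """
    lines = text.strip().splitlines()
    header = lines[0].split()
    cpu_col, cmd_col = header.index("%CPU"), header.index("COMMAND")
    result = {}
    for line in lines[1:]:
        cols = line.split()
        result[cols[cmd_col]] = float(cols[cpu_col])
    return result


usage = cpu_by_command(TOP_OUTPUT)
per_core_total = sum(usage.values())
# In Irix mode %CPU is relative to ONE CPU; dividing by the vCPU count
# gives the share of the whole virtual machine.
machine_share = per_core_total / N_VCPUS
print(f"sum of %CPU: {per_core_total:.1f}")
print(f"share of all {N_VCPUS} vCPUs: {machine_share:.2f}%")
```

Under that assumption, the listed processes together use about 2.7% of one core, which is well under 0.2% of the whole machine.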

The most time-consuming custom program installed is Harel's cloudOptics program. Even while it runs, CPU usage on the 16-core Xeon system won't go above 0.7%.

Asking for a page that ends up interacting with the mysql server won't go above 7% either.

Running a whole execution and visualization subsystem like RFDBK (disk access + R processing + graphics + Shiny Server interface) still stays at 0.7%:

```
311164 root 20 0 10312 3900 3124 R 1.0 0.0 0:03.44 top
617 root 20 0 934452 103992 35284 S 0.7 0.1 6:22.97 shiny-server
60783 mysql 20 0 3677016 528560 39180 S 0.7 0.4 709:44.11 mysqld
```

So, plenty of 0.7% usage slices are still free to use!

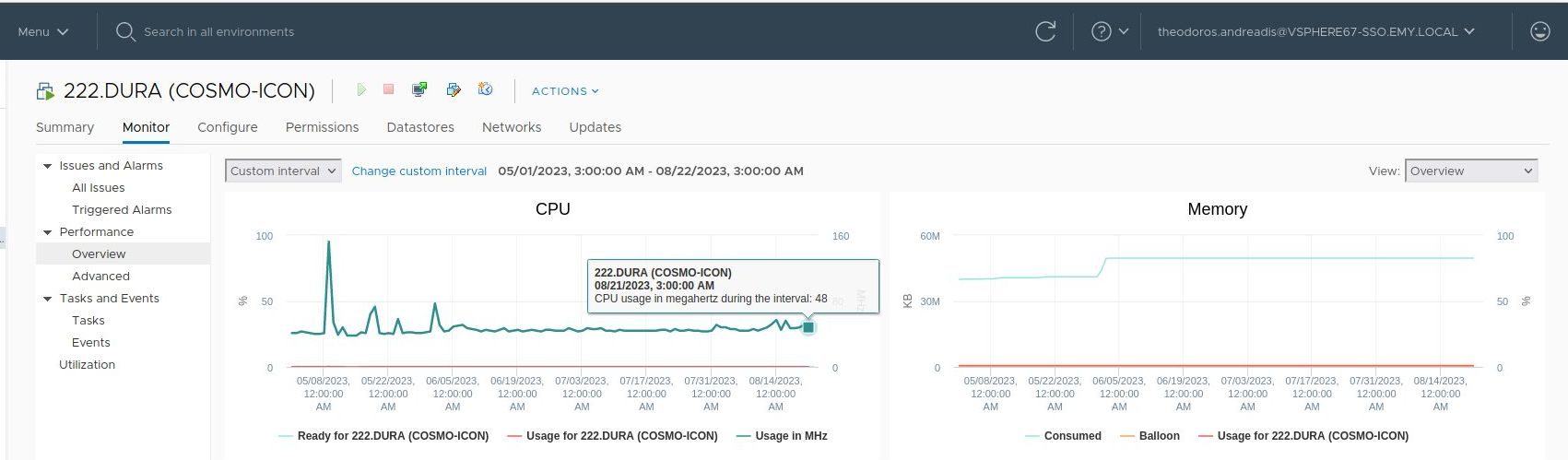

The virtual system is monitored by VMware's vSphere software. Its utilization history (Dec 1st to Aug 22nd) shows that, after the initial flurry of installations and compilations, the physical CPUs stay at ~25% of their nominal frequency, practically in idle mode, and memory usage has flatlined.

So, in a nutshell, the Cosmo server is a fat lazy cat just blinking now and then.

The Consortium's 5000 € machine is (severely) underused, and the previous 1500 € one would still be adequate. So, if you have in mind a new application that needs to be shared within the consortium and/or with the rest of the world, it probably fits computationally.

So we are open to new ideas and suggestions, and here is one that (as far as I know) no one has tried before: an instant high-resolution model demo!

Think about arranging a live ICON run on the web around any point a user clicks on the globe: e.g. a 6-hour run over a 1×1 degree area at 2 km resolution, driven by global ICON, producing wind, temperature and precipitation files and charts. Would that be a nice showcase and testbed for potential new ICON users?
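To give a feel for the size of such a demo, here is a minimal sketch that takes the clicked point, builds the 1×1 degree box around it and estimates how many 2 km grid columns it holds. `demo_domain`, `wrap_lon` and the 111.2 km-per-degree constant are illustrative assumptions: the estimate treats the box as a regular grid of spacing `dx_km`, whereas ICON uses an icosahedral triangular grid, so the count is only an order-of-magnitude figure per model level.

```python
import math

KM_PER_DEG_LAT = 111.2  # approximate length of one degree of latitude


def wrap_lon(x: float) -> float:
    """Bring a longitude back into [-180, 180)."""
    return (x + 180.0) % 360.0 - 180.0


def demo_domain(lat: float, lon: float, size_deg: float = 1.0,
                dx_km: float = 2.0) -> dict[str, float]:
    """Bounding box and rough column count for a square lat/lon patch.

    Hypothetical helper: assumes a regular grid with spacing dx_km.
    Near the dateline 'west' can end up larger than 'east'.
    """
    half = size_deg / 2
    south, north = max(lat - half, -90.0), min(lat + half, 90.0)
    west, east = wrap_lon(lon - half), wrap_lon(lon + half)
    ns_km = (north - south) * KM_PER_DEG_LAT
    # Meridians converge, so the east-west extent shrinks with cos(latitude).
    ew_km = size_deg * KM_PER_DEG_LAT * math.cos(math.radians(lat))
    columns = (ns_km / dx_km) * (ew_km / dx_km)
    return {"south": south, "north": north, "west": west, "east": east,
            "columns": columns}


# e.g. a click on Athens (about 38.0 N, 23.7 E)
print(demo_domain(38.0, 23.7))
```

Near the equator such a box holds roughly 3,100 columns per level; at the latitude of Athens it is about 2,400.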

Theodore Andreadis (HNMS)

for COSMO 25th GM

September 2023