Until 2022, the COSMO consortium members used the COSMO-Model for research and operational applications. A brief account of the model's use within the consortium, together with the members' last working setups, is kept. The COSMO-Model is still in use by licensees and supported external users.

The consortium has switched from the COSMO-Model to ICON; a priority project, C2I, was dedicated to that transition. Currently, not much about this operational transition appears on this site. Until there is more, here is what is available about it:

Besides universities and research institutes, which can use the COSMO-Model under a free research license, national weather services that do not belong to the COSMO consortium can also use the model (COSMO or ICON), with analysis and boundary conditions from ICON, produced and distributed by DWD. The service is free for developing countries; others pay a yearly support fee of €20,000.

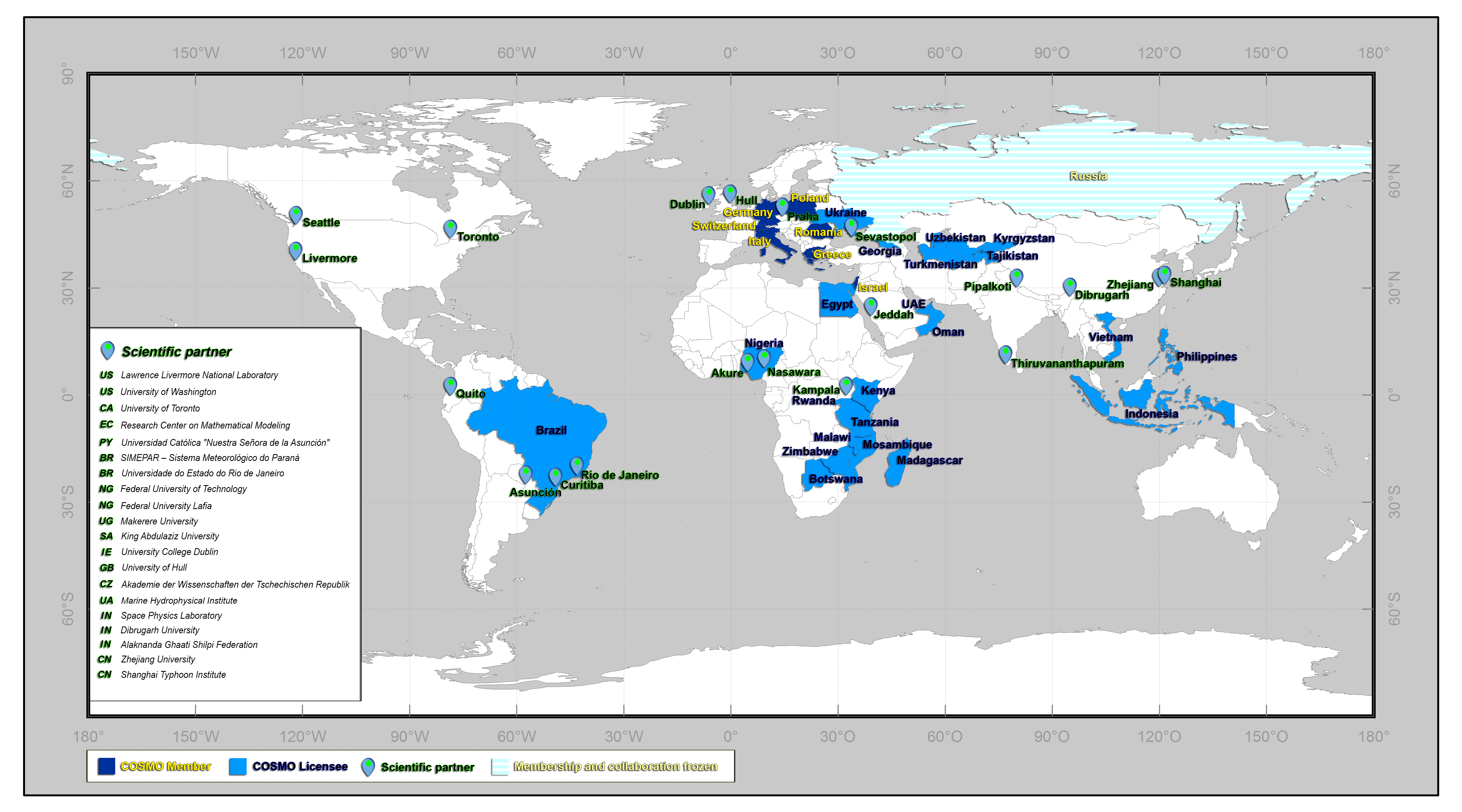

Figure 1: Countries where the national weather service or a research institute is using the COSMO-Model or switching to ICON.