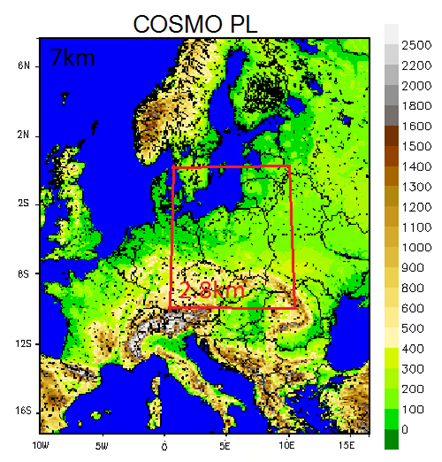

Fig. 1. COSMO Model grids layout at IMWM-NRI.

Last updated: 6 Feb 2018


IMWM-NRI (Institute of Meteorology and Water Management - National Research Institute) runs two basic COSMO Model applications, with grid spacings of 7km and 2.8km respectively. Domain extents, lead times and ensemble sizes are a compromise between users' demands and hardware capabilities.


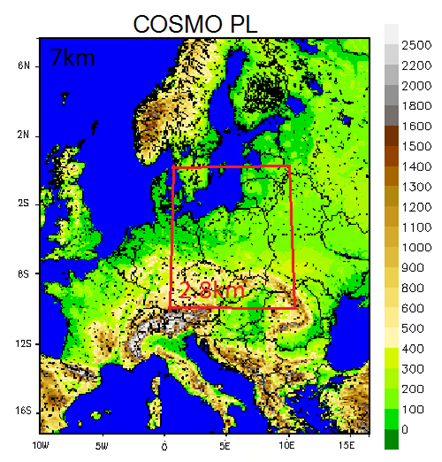

IMWM-NRI operates COSMO Model version 5.01 on the 7km (0.0625°) grid under the code name COSMO-PL7. The model runs 4 times a day, starting at 00, 06, 12 and 18 UTC, with a forecast lead time of 84 hours.

The operational domain contains 415x460 grid points in the horizontal and 40 levels in the vertical. In rotated coordinates (north pole at 170°W, 32.5°N) the computational domain extends from 10°W, 19°S to about 15.9°E, 9.7°N, covering mainly Central Europe, parts of Western and Eastern Europe, the Baltic Sea and part of the Mediterranean Sea (Fig. 2).
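The quoted domain corners can be cross-checked from the grid size and spacing. The short sketch below assumes that 415 is the west-east and 460 the south-north point count (the page does not state the order explicitly); the function and variable names are illustrative only.

```python
def far_corner(start_deg, n_points, spacing_deg):
    """Coordinate of the last grid point along one axis, in rotated degrees."""
    # n_points grid points span (n_points - 1) intervals
    return start_deg + (n_points - 1) * spacing_deg


SPACING_DEG = 0.0625                  # COSMO-PL7 grid spacing
NX, NY = 415, 460                     # assumed order: west-east, south-north
START_LON, START_LAT = -10.0, -19.0   # 10 deg W, 19 deg S (rotated coordinates)

end_lon = far_corner(START_LON, NX, SPACING_DEG)
end_lat = far_corner(START_LAT, NY, SPACING_DEG)

print(f"north-east corner: {end_lon:.4f} E, {end_lat:.4f} N")
# -> north-east corner: 15.8750 E, 9.6875 N
```

Both values round to the "about 15.9°E and 9.7°N" given in the text, which supports the assumed axis order.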

Fig. 2. COSMO-PL7 domain.

Boundary conditions (BCs) for COSMO-PL7 come from the DWD ICON global model and are interpolated with int2lm version 2.01. Initial conditions (ICs) are provided by the Data Assimilation Cycle (see below) or, if that fails, are interpolated from DWD ICON model forecast data.

Because the run starts with a delay relative to real time, data assimilation can be used in the first hours of the forecast. The Data Assimilation Cycle that provides ICs for COSMO-PL7 consists of short runs with a 6h lead time, performed with a 6h delay, with data assimilation covering the full time horizon. Currently we assimilate SYNOP, TEMP, AMDAR and PILOT data.

The model output is provided in GRIB-1.

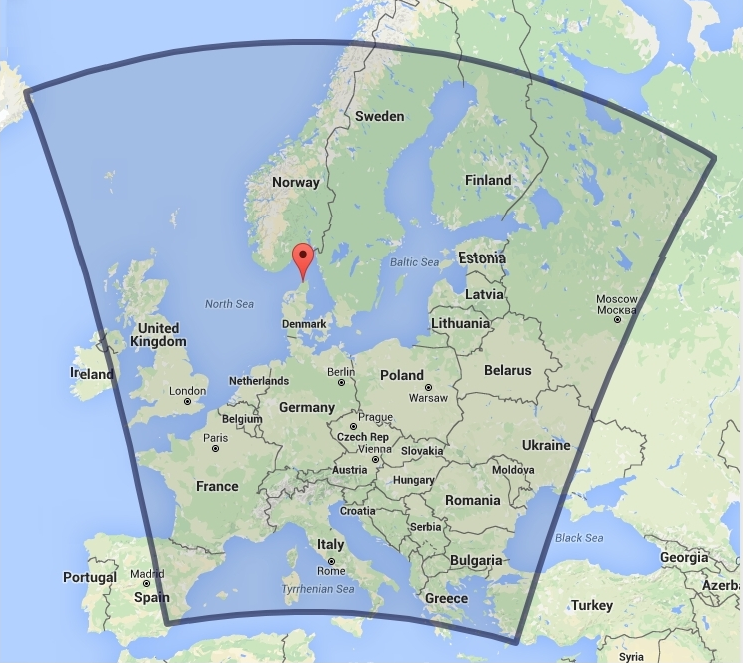

COSMO Model on the 2.8km (0.025°) grid, code name COSMO-PL2.8, is run at IMWM-NRI 4 times a day in parallel with COSMO-PL7. The computational domain of COSMO-PL2.8 covers Poland and the surrounding area, as required by forecast users (Fig. 3).

The domain extends from about 0.7°E, 2.4°S to about 10.1°E, 7.7°N (rotated coordinates, north pole at 170°W, 40°N), using 380x405 grid points.
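As a rough consistency check, the grid spacing in degrees can be converted to kilometres and multiplied up to the domain size. The sketch below assumes a mean Earth radius of 6371 km and ignores the slight shrinking of longitude intervals away from the rotated equator, so the numbers are approximate; all names are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0   # assumption: mean Earth radius
KM_PER_DEGREE = 2 * math.pi * EARTH_RADIUS_KM / 360.0


def spacing_km(spacing_deg):
    """Approximate grid spacing in km near the rotated equator."""
    return spacing_deg * KM_PER_DEGREE


def span_km(n_points, spacing_deg):
    """Approximate distance between the first and last grid point on one axis."""
    return (n_points - 1) * spacing_km(spacing_deg)


dx = spacing_km(0.025)          # COSMO-PL2.8 spacing
width = span_km(380, 0.025)     # assumed west-east point count
height = span_km(405, 0.025)    # assumed south-north point count

print(f"spacing ~{dx:.2f} km, domain ~{width:.0f} km x {height:.0f} km")
```

The spacing comes out close to the nominal 2.8km, and the domain is a little over 1000 km on each side.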

Fig. 3. COSMO-PL2.8 domain.

COSMO-PL7 serves as the source of ICs and BCs for COSMO-PL2.8. Input data are interpolated with int2lm version 2.01.

COSMO-PL2.8 is also based on COSMO Model version 5.01, and its output is provided in GRIB-1. As in the COSMO-PL7 application, COSMO-PL2.8 uses data assimilation in the first hours of the forecast.

The forecast lead time of COSMO-PL2.8 is currently 48h (a value dictated by limited resources).

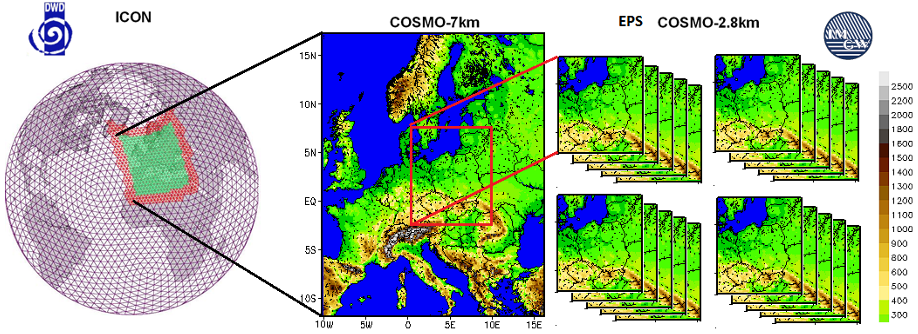

At IMWM-NRI we also run ensemble forecasts on the 2.8km grid (COSMO-PL2.8 TLE), using a configuration similar to that of COSMO-PL2.8, with I/O limited to the necessary minimum and the lead time shortened to 36h.

COSMO-PL2.8 TLE is based on the time-lagged approach, in which ICs are provided by four consecutive COSMO-PL7 forecasts with different start times. The ensemble consists of 20 members; perturbations are introduced at the lower boundary and applied to the soil-atmosphere transfer parameters for water vapor.
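The time-lagged layout can be illustrated with a small sketch. It assumes that the 20 members are split evenly over the four COSMO-PL7 start times (5 per start time) and that the members sharing a start time differ only in their perturbation; neither detail is stated on this page, and the `perturbation_index` field is purely hypothetical.

```python
from datetime import datetime, timedelta

CYCLE_HOURS = 6      # COSMO-PL7 starts at 00, 06, 12 and 18 UTC
N_LAGS = 4           # four consecutive COSMO-PL7 forecasts
N_MEMBERS = 20
MEMBERS_PER_LAG = N_MEMBERS // N_LAGS   # assumption: even split, 5 per start time


def tle_members(latest_start):
    """List ensemble members with the COSMO-PL7 run that supplies their ICs."""
    members = []
    for lag in range(N_LAGS):
        ic_run = latest_start - timedelta(hours=lag * CYCLE_HOURS)
        for k in range(MEMBERS_PER_LAG):
            members.append({
                "member": len(members) + 1,
                "ic_run": ic_run,
                "perturbation_index": k,   # hypothetical label for the perturbation
            })
    return members


members = tle_members(datetime(2018, 2, 6, 12))
for m in members[::MEMBERS_PER_LAG]:
    print(m["member"], m["ic_run"].strftime("%Y-%m-%d %H UTC"))
```

For a latest start at 12 UTC, the ICs in this sketch come from the 12, 06 and 00 UTC runs of the same day and the 18 UTC run of the previous day.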

COSMO-PL2.8 TLE uses a modified version of COSMO Model 5.01, with additional elements related to the perturbation mechanism and random number generator handling.

Fig. 4. COSMO-PL2.8 TLE.

The table below lists sample namelists used at IMWM-NRI for the applications described above.

| | int2lm v2.01 | COSMO v5.01 |
|---|---|---|
| COSMO-PL7 | INPUT | INPUT_IO, INPUT_INI, INPUT_ORG, INPUT_DYN, INPUT_PHY, INPUT_ASS, INPUT_DIA, INPUT_SAT, INPUT_EPS |
| COSMO-PL2.8 | INPUT | INPUT_IO, INPUT_INI, INPUT_ORG, INPUT_DYN, INPUT_PHY, INPUT_ASS, INPUT_DIA, INPUT_SAT, INPUT_EPS |

In COSMO-PL7 we use the 2 time-level Runge-Kutta scheme with a 60s time step. Subgrid-scale convection is parameterised with the Tiedtke scheme. Turbulence is parameterised with the prognostic TKE-based scheme with default settings. The application uses subgrid-scale orography and most of the remaining physical parameterisations.

In COSMO-PL2.8 we also use the 2 time-level Runge-Kutta scheme, here with a 20s time step. Subgrid-scale convection is parameterised with a shallow convection scheme based on the Tiedtke scheme. Turbulence is parameterised with the prognostic TKE-based scheme with default settings. For grid-scale precipitation we use the extended basic scheme with cloud water and cloud ice.
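The time steps translate directly into the number of model steps per forecast. The sketch below combines them with the lead times quoted earlier on this page (84h for COSMO-PL7, 48h for COSMO-PL2.8); the helper name is illustrative.

```python
def n_steps(lead_time_h, dt_s):
    """Number of model time steps needed to cover the lead time."""
    total_s = lead_time_h * 3600
    if total_s % dt_s:
        raise ValueError("lead time is not a whole number of time steps")
    return total_s // dt_s


# (lead time in hours, time step in seconds)
runs = {
    "COSMO-PL7": (84, 60),
    "COSMO-PL2.8": (48, 20),
}

steps = {name: n_steps(lead_h, dt_s) for name, (lead_h, dt_s) in runs.items()}
for name, count in steps.items():
    print(f"{name}: {count} steps")
# -> COSMO-PL7: 5040 steps
# -> COSMO-PL2.8: 8640 steps
```

Despite its shorter lead time, COSMO-PL2.8 needs more steps per run than COSMO-PL7 because its time step is three times shorter.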

The applications described in sections 2-4 above run on a custom HP- and Intel-based cluster consisting of 145 HP BL460c servers placed in an HP c7000 system. 139 of these servers are used as computational nodes. The nodes are diskless and each is equipped with 2 Intel Xeon 10-core CPUs (@3GHz), 128GB RAM and 2 InfiniBand network cards.

Cluster connectivity is provided by an InfiniBand network implemented in a Fat Tree architecture, based on HP BLc 4X QDR and Mellanox SX6025 switches. The 32TB filesystem is based on HP 3PAR StoreServ 7400 and HP ProLiant DL380p.

The COSMO-PL7 application runs on 140 cores, a number limited by the input data transfer speed and by our cluster resources. Additional cores are provided for parallel runs of the int2lm interpolator.

The COSMO-PL2.8 application runs on 160 cores, with additional resources for preprocessing the input data provided by COSMO-PL7.

Each member of COSMO-PL2.8 TLE runs on 120 cores.
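The core counts can be set against the size of the cluster. The following sketch assumes that jobs are placed on whole nodes and, as a worst case, that both deterministic runs and all 20 ensemble members run at the same time; the page does not say how the jobs are actually scheduled, and the extra cores used by int2lm are left out.

```python
import math

COMPUTE_NODES = 139
CORES_PER_NODE = 2 * 10    # 2 CPUs with 10 cores each
total_cores = COMPUTE_NODES * CORES_PER_NODE

# application -> (cores per run, number of simultaneous runs; assumed worst case)
jobs = {
    "COSMO-PL7": (140, 1),
    "COSMO-PL2.8": (160, 1),
    "COSMO-PL2.8 TLE": (120, 20),
}


def nodes_needed(cores):
    """Whole nodes required for a job of the given core count."""
    return math.ceil(cores / CORES_PER_NODE)


requested_cores = sum(cores * runs for cores, runs in jobs.values())
requested_nodes = sum(nodes_needed(cores) * runs for cores, runs in jobs.values())

print(f"available: {total_cores} cores on {COMPUTE_NODES} nodes")
print(f"requested: {requested_cores} cores on {requested_nodes} nodes")
# -> available: 2780 cores on 139 nodes
# -> requested: 2700 cores on 135 nodes
```

Under these assumptions the three applications together would nearly fill the computational nodes, which is consistent with the lead times and ensemble size being described as resource-limited.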